How Our Bible Chat Generates Answers

A Clear, Honest, and In-Depth Explanation for Users of the Christ Reigns Media Dashboard

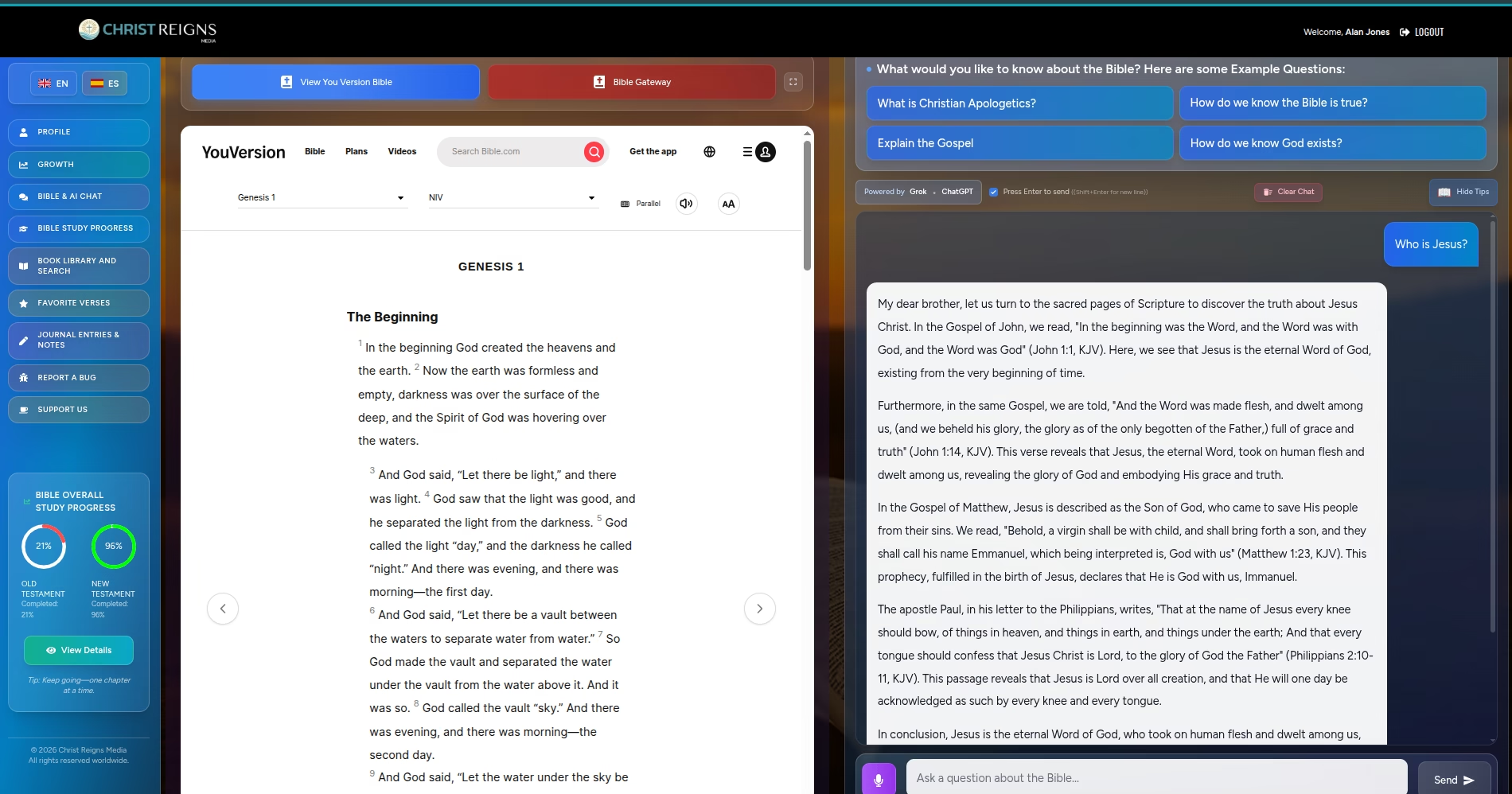

If you’ve ever typed a question into the Bible Chat and thought, “Is this AI just making things up?” or “Can I really trust what it says about Scripture?” — you’re asking the right questions. That healthy skepticism is exactly why we’re pulling back the curtain.

This isn’t a black box. It’s advanced technology, built with intention, and guided by a clear theological framework. Here’s exactly how it works — from the moment you hit “Send” until a thoughtful, biblically grounded answer appears on your screen.

The Two Partners Behind Every Answer

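Every response in Bible Chat is powered by two distinct technologies working together: Llama 3.1, the AI model that writes each answer, and Groq, the specialized hardware platform that runs it.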

Think of it like this:

Llama 3.1 is a highly trained mind that has learned from an enormous library of text. That library very likely includes the Bible in multiple translations, along with theological commentaries, sermons, and Christian scholarship. Groq is the specialized racetrack and pit crew that lets that mind think at lightning speed.

Together, they typically deliver answers in about a second. The same answer can take 5–15 seconds on ordinary hardware.

What Is Llama 3.1?

Llama 3.1 (developed by Meta) is one of the most capable openly available AI models in the world. The version we use is the 8B model, which has 8 billion parameters. Think of parameters as the strengths of the connections between the model’s “brain cells.”

For context:

- GPT-3 had 175 billion parameters

- GPT-4’s size has not been disclosed; unofficial estimates put it at over a trillion

- Llama 3.1 8B is deliberately smaller, trading some raw capability for speed and efficiency
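One concrete reason size matters is memory. The sketch below gives a back-of-the-envelope estimate. It assumes 2 bytes per parameter, as in a 16-bit format such as BF16. It counts only the weights, not the extra working memory a running model needs. The helper name `weight_memory_gb` is our own.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory (in gigabytes) needed just to store a model's weights.

    Assumption: every parameter takes the same number of bytes; runtime
    memory for activations and caches is ignored.
    """
    return num_params * bytes_per_param / 1e9


# Rounded parameter counts; 2 bytes each assumes a 16-bit format such as BF16.
for name, params in [("Llama 3.1 8B", 8e9), ("GPT-3 (175B)", 175e9)]:
    print(f"{name}: ~{weight_memory_gb(params, 2):.0f} GB at 16-bit precision")
```

By this estimate, the 8B model needs about 16 GB for its weights and a 175B model needs about 350 GB. That gap is a large part of why smaller models are cheaper and faster to serve.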

It was trained on roughly 15 trillion tokens (word pieces) of text from books, websites, code, and academic papers. Meta has not published a full list of sources. Still, a dataset that large almost certainly includes the Bible many thousands of times, in different translations (KJV, NIV, ESV, etc.).

During training, it learned patterns: how verses connect, how doctrines are explained, how Christians talk about faith. It doesn’t “know” the Bible like a human scholar. Instead, it has compressed that knowledge into mathematical relationships inside its 8 billion parameters.

What Makes Groq So Fast?

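Running an AI model is incredibly demanding. Producing even a single token takes billions of calculations, and a full answer takes trillions.

General-purpose GPUs can run these models. However, generating text one token at a time is often limited by how fast the chip can move the model’s weights in and out of memory. Groq tackled this problem with custom hardware called the LPU (Language Processing Unit): chips designed from the ground up for language models.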

The result?

- Traditional setup: 5–15 seconds per answer

- Groq: 0.5–1.5 seconds

It’s the difference between a regular car and a Formula 1 racer on a track built specifically for it.
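Here is what “billions of calculations” means in numbers. A common rule of thumb says that generating one token costs about two operations (a multiply and an add) per parameter. The sketch below assumes that rule and a hypothetical 300-token answer. It ignores the smaller extra cost of attention over long conversations. The function names are illustrative.

```python
def flops_per_token(num_params: float) -> float:
    """Rule of thumb: about 2 floating-point operations (one multiply,
    one add) per parameter for each generated token."""
    return 2 * num_params


def flops_per_answer(num_params: float, answer_tokens: int) -> float:
    """Total operations for an answer, ignoring attention overhead."""
    return flops_per_token(num_params) * answer_tokens


per_token = flops_per_token(8e9)         # Llama 3.1 8B, rounded
per_answer = flops_per_answer(8e9, 300)  # hypothetical 300-token answer
print(f"{per_token:.1e} operations per token, {per_answer:.1e} per answer")
```

That comes to about 16 billion operations per token and nearly 5 trillion for the whole answer. All of that work happens for every answer, which is why hardware built for it makes such a visible difference.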

How a Question Becomes an Answer (Step by Step)

Let’s walk through what happens when you ask, “Who is Jesus?”

1. Your Question Is Prepared

Your words are sent to Groq’s servers along with a hidden system prompt. You never see it, but our code attaches it to every request programmatically, with this specific instruction:

“You are a Protestant Bible scholar committed to the inerrancy, authority, and sufficiency of the Holy Scriptures.

You must answer all questions solely based on the Bible, using the King James Version (KJV) or (NIV).”

This prompt is the single most important guardrail. It steers every answer toward faithfulness to Scripture. As evangelical, Jesus-loving believers, we think you will be glad to see this instruction.
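The Python sketch below shows what “sent along with a hidden system prompt” can look like in code. It is not our production code. It makes these assumptions:

- Groq’s OpenAI-compatible chat completions endpoint is used.
- The model ID is `llama-3.1-8b-instant`.
- The prompt is only the excerpt quoted above.

The sketch builds the request but does not send it.

```python
import json
import urllib.request

# Assumption: Groq's OpenAI-compatible chat completions endpoint.
GROQ_URL = "https://api.groq.com/openai/v1/chat/completions"

# Only the excerpt quoted above; the full production prompt may be longer.
SYSTEM_PROMPT = (
    "You are a Protestant Bible scholar committed to the inerrancy, authority, "
    "and sufficiency of the Holy Scriptures. "
    "You must answer all questions solely based on the Bible, using the "
    "King James Version (KJV) or (NIV)."
)


def build_request(question: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat request that pairs the hidden
    system prompt with the user's question."""
    payload = {
        "model": "llama-3.1-8b-instant",  # assumed Groq model ID for Llama 3.1 8B
        "temperature": 0.3,               # low temperature (step 5 below)
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},  # never shown to the user
            {"role": "user", "content": question},         # what you typed
        ],
    }
    return urllib.request.Request(
        GROQ_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_request("Who is Jesus?", api_key="YOUR_GROQ_API_KEY")
body = json.loads(req.data)
print(body["messages"][0]["role"], "|", body["messages"][1]["content"])
# prints: system | Who is Jesus?
```

Passing `req` to `urllib.request.urlopen` with a real API key would send it. In the OpenAI-compatible response format, the answer text comes back under `choices[0].message.content`.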

2. Tokenization

The model doesn’t read English directly; it works with numbers. Your question is broken into tokens (small pieces of words), and each token becomes a number:

“Who is Jesus?” → [15546, 374, 10811, 30]
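A toy tokenizer can illustrate the idea. This sketch uses a hypothetical four-entry vocabulary that simply reuses the IDs shown above, plus a greedy longest-match lookup. The real Llama 3.1 tokenizer uses byte-pair encoding over a vocabulary of roughly 128,000 tokens, so treat this as an illustration of the concept only. `TOY_VOCAB` and `tokenize` are names chosen for the example.

```python
# Hypothetical toy vocabulary reusing the IDs from the example above.
# The real tokenizer is byte-pair encoding with ~128,000 entries.
TOY_VOCAB = {
    "Who": 15546,
    " is": 374,
    " Jesus": 10811,
    "?": 30,
}


def tokenize(text: str, vocab: dict[str, int]) -> list[int]:
    """Greedy longest-match tokenization: at each position, take the
    longest vocabulary entry that matches and emit its ID."""
    ids = []
    i = 0
    longest = max(len(piece) for piece in vocab)
    while i < len(text):
        # Try the longest possible piece first, then shorter ones.
        for size in range(min(longest, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in vocab:
                ids.append(vocab[piece])
                i += size
                break
        else:
            raise ValueError(f"no token for {text[i]!r}")
    return ids


print(tokenize("Who is Jesus?", TOY_VOCAB))  # [15546, 374, 10811, 30]
```

Notice that the spaces belong to the tokens (" is", " Jesus"). Many modern tokenizers work this way.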

3. The Deep Thinking (32 Transformer Layers)

The tokens pass through 32 stacked transformer layers. Each layer refines the picture built by the one before it. As a rough simplification:

- Early layers: Understand grammar and basic meaning

- Middle layers: Recognize “Jesus” as the central figure of Christianity

- Deep layers: Pull in theological knowledge, apply the system prompt, and plan a coherent response

This is where the magic of self-attention happens — the model weighs which words matter most to each other.
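Self-attention fits in a few lines of code. The example below is a minimal, single-head version of scaled dot-product attention. It includes the causal mask that decoder models such as Llama use, so each token can look only at itself and earlier tokens. The 2-dimensional vectors are made up. Real models first pass each vector through learned query, key, and value projections, which this sketch skips, and they run many attention heads in every layer.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries: list[list[float]],
                          keys: list[list[float]],
                          values: list[list[float]]):
    """Single-head scaled dot-product attention with a causal mask:
    token i may only attend to tokens 0..i."""
    d = len(keys[0])
    outputs, weight_rows = [], []
    for i, q in enumerate(queries):
        visible = range(i + 1)  # causal mask: no peeking at later tokens
        # Similarity between this token's query and each visible key.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
                  for j in visible]
        weights = softmax(scores)  # how much token i "listens" to each token
        weight_rows.append(weights)
        # Output: attention-weighted average of the visible value vectors.
        out = [sum(w * values[j][k] for w, j in zip(weights, visible))
               for k in range(len(values[0]))]
        outputs.append(out)
    return outputs, weight_rows


# Hypothetical 2-dimensional vectors for "Who", " is", " Jesus", "?".
x = [[1.0, 0.0], [0.5, 0.5], [0.0, 2.0], [0.2, 0.1]]
out, weights = causal_self_attention(x, x, x)  # identity projections for simplicity
for row in weights:
    print([round(w, 2) for w in row])
```

Each printed row shows how one token divides its attention among itself and the tokens before it. Every row sums to 1.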

4. Word-by-Word Generation

The model generates the answer one token at a time. At each step it assigns a probability to every possible next token and picks one from that distribution, effectively asking: “What should come next?”

It doesn’t write the whole answer at once. It builds it sequentially, checking back against everything it’s already said.
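Here is a sketch of the one-token-at-a-time loop. The `toy_model` function is a hypothetical stand-in that only looks up the last token in a tiny table. A real model, by contrast, runs all 32 layers over the whole sequence and scores its entire vocabulary at every step. `generate` and the `<eos>` end marker are names chosen for this illustration.

```python
from typing import Callable


def generate(prompt: list[str],
             next_token: Callable[[list[str]], str],
             eos: str = "<eos>",
             max_new_tokens: int = 50) -> list[str]:
    """Autoregressive decoding: produce one token at a time, feeding the
    whole sequence so far back in before choosing the next one."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tok = next_token(tokens)  # the "model" sees prompt + everything generated
        if tok == eos:            # end-of-sequence marker: the answer is done
            break
        tokens.append(tok)
    return tokens[len(prompt):]   # return only the newly generated tokens


# Hypothetical stand-in for the model: looks only at the last token.
TOY_NEXT = {"?": "Jesus", "Jesus": "is", "is": "the", "the": "Son",
            "Son": "of", "of": "God", "God": ".", ".": "<eos>"}


def toy_model(tokens: list[str]) -> str:
    return TOY_NEXT.get(tokens[-1], "<eos>")


answer = generate(["Who", "is", "Jesus", "?"], toy_model)
print(" ".join(answer))  # Jesus is the Son of God .
```

The `max_new_tokens` cap keeps generation from running forever if the end marker never appears.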

5. Temperature Control

We set the temperature to 0.3, which is very low. This makes answers consistent and focused rather than creative or random. High temperature = more poetic but riskier. Low temperature = more predictable, which helps keep answers biblically grounded but does not guarantee accuracy.
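The math behind temperature is simple in the standard formulation. The model’s raw scores (logits) are divided by the temperature before a softmax turns them into probabilities. The three scores below are hypothetical, and `softmax_with_temperature` is a name chosen for this sketch.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores into probabilities; lower temperature sharpens
    the distribution toward the top-scoring candidate."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.5]
for t in (1.0, 0.3):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

With these made-up scores, lowering the temperature from 1.0 to 0.3 raises the top candidate’s share from about 63% to about 96%. That is why low-temperature answers vary less from one run to the next.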

Is the AI “Looking Up” Bible Verses?

No.

It is not searching a database in real time. It is not opening Bible software behind the scenes.

It is generating text based on patterns it learned during training. That’s why it can explain John 3:16 beautifully — but it can also occasionally misquote a reference if the patterns mislead it.

This is why we are transparent: Bible Chat is a study assistant, never a final authority.

Can It Be Wrong?

Yes. And we want you to know that.

The model can:

- Misquote a verse reference

- Slightly over-simplify complex doctrines

- Occasionally “hallucinate” (state something confidently that isn’t quite right)

This isn’t because it’s deceptive. It’s because it’s a prediction machine, not the Lord Jesus Christ, who is omniscient. Keep that in mind at all times: it’s a study tool and nothing more.

That’s why every answer is shaped by:

- The Protestant system prompt

- Low temperature for consistency

- Your responsibility to test everything against Scripture (Acts 17:11)

Why We Built It This Way

We chose Groq + Llama 3.1 because it gives us the best combination of:

| Factor | Why it matters |
|---|---|
| Speed | You shouldn’t wait 10 seconds to hear about Jesus |
| Cost | We can offer this free/affordable to everyone |
| Quality | Excellent for theology when properly guided |
| Open Source | Full control over the system prompt |
| Transparency | We can explain exactly how it works |

The Honest Bottom Line

Bible Chat is not:

- A replacement for the Holy Spirit

- A substitute for your local church

- An infallible teacher

Bible Chat is:

- A faithful, lightning-fast study companion

- A tool that makes deep Bible exploration more accessible

- A modern concordance + commentary + tutor — all in one

It is built by believers, for believers, with Scripture as the ultimate standard.

So go ahead. Ask it anything.

Then open your Bible and let the Word of God have the final say.

That’s exactly how we designed it to be used.

Truth does not come from AI. Truth comes from God’s Word.

This tool simply helps you engage with it more deeply, more quickly, and with greater joy.

Welcome to the future of Bible study — grounded in the ancient truth that never changes.